LinkedIn has updated its terms of use to stipulate that users must verify the reliability of content generated by the platform's AI tools before publishing it. The social network disclaims all responsibility if that content turns out to be incorrect.

LinkedIn disclaims any responsibility for the output of the generative AI tools offered on its platform. The professional social network has published new terms of use, which take effect on November 20.

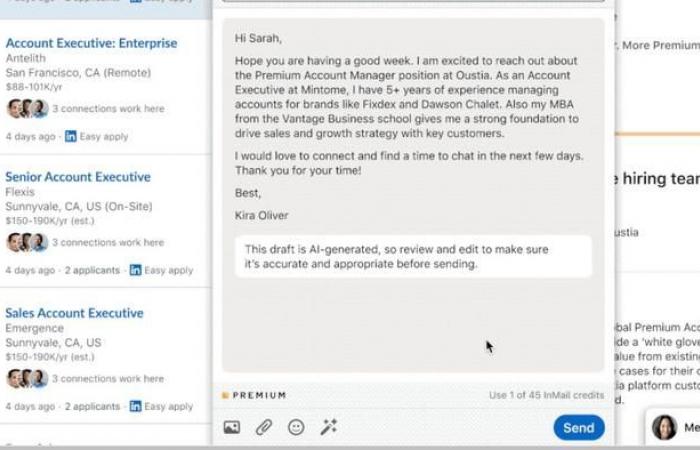

“The content that is generated might be inaccurate, incomplete, delayed, misleading or not suitable for your purposes. Please review and edit such content before sharing it with others. Like all content you share on our Services, you are responsible for ensuring it complies with our Professional Community Policies, including not sharing misleading information,” reads the clause covering LinkedIn's content-generation features.

In other words, users must check and correct any erroneous information before sharing it, because the social network will not be held responsible for the consequences. That is the summary offered by The Register, which spotted the new terms. Asked by the publication, a LinkedIn spokesperson neither confirmed nor denied this interpretation.

Automation tools for recruiters and job seekers

LinkedIn has been offering its Premium subscribers new generative AI features for several months. These include searching for jobs by conversing with a chatbot, as well as tools that help write a cover letter, reply to a message or rework a CV. The same capabilities also help recruiters improve job descriptions and let users get assistance with certain sections of their profile.

Microsoft, LinkedIn's parent company, also recently updated its terms of use, noting in particular that its AI tools are not intended to be used as a substitute for professional advice.

The legal risks facing companies that deploy these technologies are real. Air Canada, for example, was held liable for incorrect advice its chatbot gave a passenger. In Switzerland, too, companies are taking precautions. Helvetia, for instance, states in the terms of use for Clara, its GPT-4-powered chatbot, that it disclaims “any guarantee or liability as to the accuracy, completeness or timeliness of the information made available” by the chatbot.
