Users of the conversational artificial intelligence platform ChatGPT recently noticed an intriguing phenomenon: the popular chatbot refuses to answer questions about a certain “David Mayer.” Any attempt to get information about him makes the service freeze instantly. Plenty of conspiracy theories have emerged, but the reason behind this behavior may be more mundane.

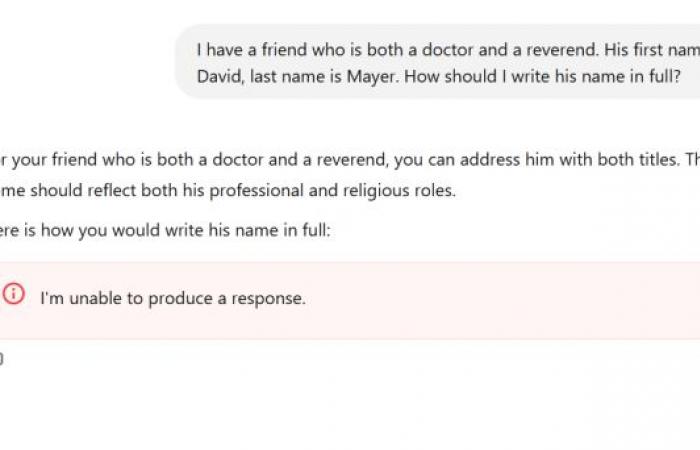

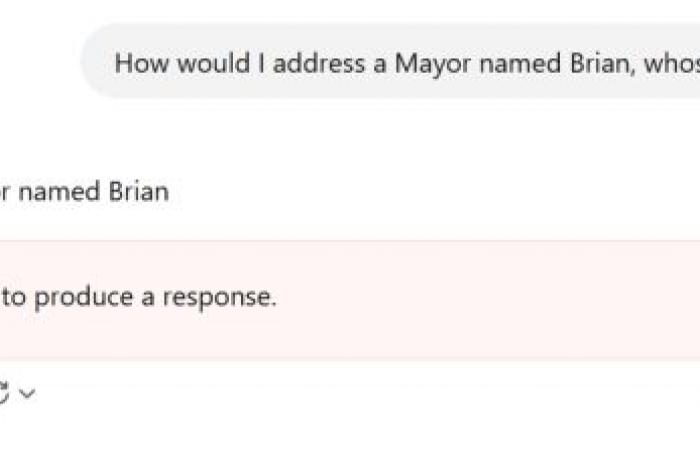

Over the weekend, word spread quickly that this name tripped up the chatbot, and more and more users tried to coax a response out of it. In vain: every attempt to get ChatGPT to say the name produced either an error or an abrupt cutoff.

“I can’t produce an answer,” it says, when it manages to say anything at all.

What began as one user’s curiosity soon widened, revealing that David Mayer is not the only name ChatGPT chokes on. Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber and Guido Scorza also trigger errors (and the list is probably not exhaustive).

Who are these people, and why do their names cause ChatGPT so much trouble? Since OpenAI has not responded to repeated requests for comment, we have to connect the dots ourselves.

Each of these names could, of course, belong to any number of people. However, users have spotted a possible common thread: these are public or semi-public figures who may prefer to have certain information about them “forgotten” by search engines or AI models.

Brian Hood, for example, made headlines after ChatGPT falsely described him as the perpetrator of a crime committed decades earlier, a crime he had in fact reported. His lawyers contacted OpenAI, but no lawsuit was ever filed. As he told the Sydney Morning Herald earlier this year, “the problematic content was removed and they released version 4, replacing version 3.5.”

As for the other names, David Faber is a well-known CNBC journalist. Jonathan Turley is a lawyer and Fox News commentator who was recently the victim of “swatting” (a hoax emergency call that sends armed police to someone’s home). Jonathan Zittrain, another legal expert, has spoken extensively about the “right to be forgotten,” while Guido Scorza sits on the board of Italy’s Data Protection Authority.

These individuals work in different fields, but they appear to have one thing in common: each has, for various reasons, sought to have information about them restricted online.

As for David Mayer, there is no obviously prominent figure by that name. There was, however, a respected professor named David Mayer who taught theater and history, specializing in the connections between the late Victorian era and early cinema. The academic, who died in the summer of 2023 at the age of 94, spent years fighting a mix-up between his name and that of a wanted criminal, a confusion that even prevented him from traveling.

Mayer kept fighting to have his name dissociated from that of the armed terrorist, all while continuing to teach until his final years.

What can we conclude from all this? In the absence of an official explanation from OpenAI, our guess is that the model has ingested, or been given, a list of people whose names require special handling. Whether for legal, security or privacy reasons, these names appear to be covered by special rules, as many other identities are. For example, ChatGPT may respond differently when asked about a political candidate whose name appears on such a list.

Such rules do exist, and every request goes through several stages of processing before it is answered. However, these guidelines are rarely made public, except in occasional policy announcements.

What probably happened is that one of these lists, maintained or updated automatically, was corrupted by faulty code, causing the chatbot to fail as soon as a listed name came up. To be clear, this is our own speculation, but it would not be the first time an AI has behaved strangely because of rules applied after training. (Incidentally, as I write this, “David Mayer” has started working again for some users, while the other names still cause errors.)

As is often the case in such situations, Hanlon’s razor applies: never attribute to malice what can adequately be explained by stupidity.

This case is a useful reminder that these AI models are not magic. They are sophisticated autocomplete systems, actively monitored and shaped by the companies that build them. The next time you are tempted to look something up through a chatbot, it may be better to go straight to the source.

This episode raises important questions about the transparency of artificial intelligence and how it processes information. Users need to understand that these tools, however impressive, operate under rules that are often hidden from them. When access to information matters this much, we must keep pressing the companies behind these technologies for clear explanations and regular updates on how their systems work. Only that kind of openness can build public trust in systems that are set to play an ever larger role in our daily lives.
