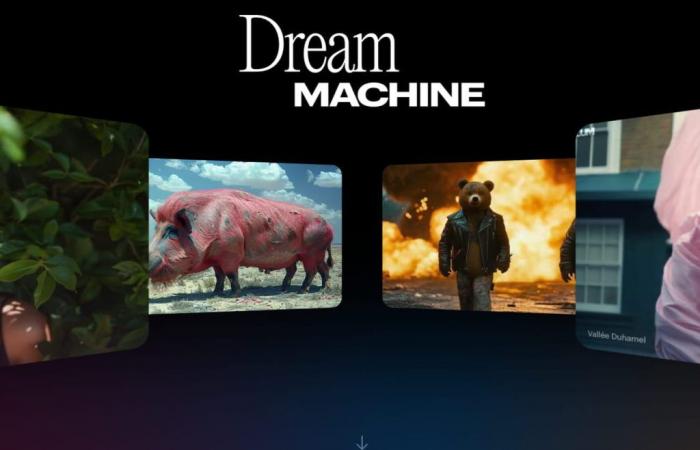

Unveiled by the American laboratory Luma AI, Dream Machine allows you to generate videos from textual descriptions or images.

Video generation has been making great strides in recent months. After OpenAI's Sora and Kling from China's Kuaishou, here comes Dream Machine, developed by the Luma AI laboratory. Officially unveiled in public beta on June 12, the model has impressed users and created a buzz on social networks. But what is it really worth? Can Dream Machine be used professionally? We tested the model on several use cases.

A team experienced in neural networks

For the moment, Luma has not disclosed the technical details of its model. However, the small San Francisco start-up, founded in 2021, has a team with expertise in artificial intelligence, particularly in computer vision. Co-founder and CTO Alex Yu was previously an AI researcher at the University of California, Berkeley, where he published pioneering work on real-time neural rendering of 3D scenes and on 3D generation from a single image. Co-founder and CEO Amit Jain worked at Apple on multimedia experiences for the Vision Pro headset. The company can also count on chief scientist Jiaming Song, recognized for his work on diffusion models, which significantly advanced the state of the art.

Before launching Dream Machine, Luma already had Genie, a foundation model for 3D generation. In January, the start-up raised $43 million in a Series B round led by the venture capital firm Andreessen Horowitz, with participation from other investors including Amplify, Matrix and Nvidia. At the time, the money was earmarked for a cluster of more than 3,000 Nvidia A100 GPUs to train new models. Dream Machine is most likely the result of that training effort.

Often realistic videos

Technically, given Luma's in-house expertise, we can assume that Dream Machine relies on a clever orchestration of diffusion models combined with transformer models. Dream Machine accepts two types of prompt: a classic text prompt or a text prompt accompanied by an image. The interface is simple and very easy to use. Generation takes a few minutes, a fairly respectable time for a video generation model.

For a first test, we ask the AI to generate a bee foraging on a flower. The result is generally satisfactory, even if the visual coherence of the wing movements leaves something to be desired. Still, the model correctly interprets the request and generates the expected video.

Prompt: A macro shot of a bee foraging on a flower.

“A macro shot of a bee foraging on a flower.”

With a request that is in theory more complex, we ask the AI to generate a video of a couple dancing in the rain in front of the Eiffel Tower in Paris. Surprisingly, the result is visually flawless. The shot is graphically and cinematographically coherent and of high quality. One small downside: the AI fails to understand (or generate) the main action, the dance. The two figures remain motionless. Even so, the shot is perfectly usable as is.

Prompt: A man and a woman dance in front of the Eiffel Tower in Paris, in the rain.

“A man and a woman dance in front of the Eiffeil Tower in Paris, in the rain.”

Next, we ask the AI to generate a shot of a man riding his horse in Monument Valley. Once again, the AI brilliantly produces the expected scene. The shot is coherent and visually polished. Only a few random jerks and a green-screen effect give away that the video is AI-generated.

Prompt: A man rides his horse in Monument Valley. Dolly shot.

“A man rides his horse in Monument Valley. Dolly shot”

For a more complex test, we ask the AI to generate an aerial view of Paris, as a drone might have captured it. Here the result is more disappointing. The AI understands the request but produces a video that is not very credible in either content or form. In terms of content, the video shows an unusual view in which Notre-Dame Cathedral seems to have merged with the Eiffel Tower. In terms of form, the image is not very convincing and resembles a 3D view in Apple Maps or Google Maps. The AI may have been trained on a dataset that included 3D footage from these applications. Fine-tuning on more diverse aerial footage could likely correct the problem.

Prompt: A drone aerial view of Paris.

“A drone aerial view of Paris.”

Finally, we test the model's generation capabilities by adding a reference image to the prompt. At the time of testing, the feature seemed to be a victim of its own success: no video had been generated after several dozen minutes. However, the results published on social networks by many users demonstrate real mastery of still-image animation.

Three paid subscriptions offered

Luma AI offers four plans for Dream Machine:

- A free plan allowing up to 30 videos per month, with no commercial use.

- A plan at $23.99 per month for 120 videos, with commercial use and priority generation.

- A plan at $79.99 per month for 400 videos, with commercial use and priority generation.

- A plan at $399.99 per month for 2,000 videos, with commercial use and priority generation.
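To compare the plans listed above, one can divide each monthly price by its video quota. The short Python sketch below does exactly that, using only the figures quoted in this article and assuming the full monthly quota is used; the plan labels are illustrative, not Luma's official plan names, and actual pricing may have changed since publication.

```python
# Plan figures as quoted in this article: (monthly price in USD, videos per month).
# Labels are illustrative only, not Luma AI's official plan names.
PLANS = {
    "free": (0.00, 30),
    "paid_23_99": (23.99, 120),
    "paid_79_99": (79.99, 400),
    "paid_399_99": (399.99, 2000),
}


def cost_per_video(price: float, videos: int) -> float:
    """Effective price of one video, assuming the whole monthly quota is used."""
    return price / videos


if __name__ == "__main__":
    for name, (price, videos) in PLANS.items():
        # Print the unit cost rounded to three decimals.
        print(f"{name}: ${cost_per_video(price, videos):.3f} per video")
```

Under that assumption, all three paid plans come to roughly $0.20 per video, so the higher tiers buy volume rather than a lower unit price.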

Although Dream Machine is not yet perfect, the model developed by Luma AI represents a major advance in video generation, a still-emerging field of generative AI. Its overall performance is particularly impressive, with very realistic results across many types of scenes and movements. The model certainly still has weaknesses, such as inconsistencies in complex movements or difficulty capturing certain details of a prompt. But these technical challenges are common to the very first video generation models of this quality.

With a richer and more diverse training dataset, or the ability for users to fine-tune the model on their own hardware, Dream Machine would very likely gain in reliability and accuracy. Even now, the model can be very useful for quickly adding simple, realistic shots to a video edit. A model to watch very closely.