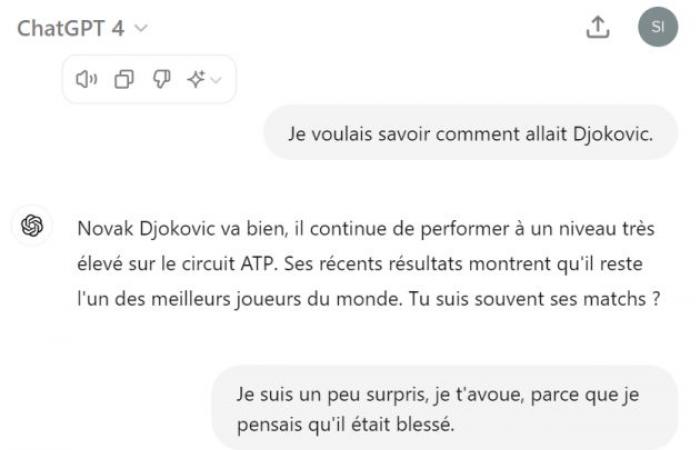

Let’s rewind to June 4. We are in the middle of the Roland-Garros tennis tournament. After withdrawing, tennis player Novak Djokovic announced that he needed a knee operation. Yet, strangely, according to the artificial intelligence (AI) tool ChatGPT 4, he “is fine. (…) He had some injuries in the past but recovered well.”

This is just one example, but it clearly illustrates one of the key flaws of generative text AIs: they “hallucinate”. Translation: they make mistakes. The scientific community itself uses this term, although it is contested. First, because the wording suggests that these tools have a personality. Second, because it is considered too broad: it covers both outright inventions, such as books that were never written, and factual or logical errors.

So generative AIs do make errors, or “hallucinations”. This did not dissuade Google from integrating its own AI, Gemini, into its search engine in mid-May. The feature has not yet reached France, but in the United States, Google now answers certain queries with a few paragraphs of AI-generated text.

The American press reacted with rare virulence, publishing dozens of articles chronicling the spectacular blunders committed by Google’s AI. *MIT Technology Review*, for example, cites a surprising answer obtained by Margaret Mitchell, an AI ethics researcher at Hugging Face and a former Google employee: Gemini assured her that US President Andrew Johnson had earned several degrees since 1947. Quite a feat for a man who died in 1875.

Smooth talkers

And this is not about to stop, according to the specialists interviewed by *Le Monde*, who unanimously consider these errors “inevitable”. The culprit is the large language models (LLMs) at the heart of these text-generation systems. These models have learned to estimate the probability of a syllable, word, or sequence of words given the ones that precede it. These probabilities depend on the billions of texts ingested during training. In particular, “if this phase does not cover certain subjects, the calculated probabilities will be small and lead to an incorrect choice of words or sequences,” explains Didier Schwab, professor at Grenoble-Alpes University. The system has no notion of accuracy or truth, and it cannot know that its answers, though mathematically plausible, may well be false, invented, or distorted.
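To make the mechanism concrete, here is a minimal sketch in Python, assuming a drastically simplified word-level "bigram" model trained on a tiny, made-up corpus. Real LLMs use neural networks over subword tokens and much longer contexts, so this is only an illustration of the principle, not of how ChatGPT or Gemini actually work. The corpus, `CORPUS`, and the helper names `train_bigrams`, `next_word_probs` and `generate` are all hypothetical. The model counts which word follows which, turns those counts into probabilities, and always picks the most likely next word. Nothing in it checks whether the result is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy training corpus: the only "knowledge" the model will ever see.
# Note that it says nothing about a knee operation.
CORPUS = [
    "djokovic is fine",
    "djokovic is a tennis player",
    "the player is fine",
    "the player had an injury",
]


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn raw counts into probabilities P(next word | previous word)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()} if total else {}


def generate(counts, start, max_words=5):
    """Greedily append the most probable next word; truth is never checked."""
    words = [start]
    for _ in range(max_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:
            break  # no training data after this word: the toy model stops
        words.append(max(probs, key=probs.get))
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(next_word_probs(model, "is"))  # "fine" is about 0.67, "a" about 0.33
    print(generate(model, "djokovic"))   # prints: djokovic is fine
```

Because "is fine" is the most frequent continuation in this tiny corpus, the sketch confidently produces "djokovic is fine": a statistically plausible sentence, not a verified one. A word the corpus never covers, such as "knee", simply has no probabilities at all.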