NVIDIA and Foxconn are collaborating to build the fastest AI supercomputer in Taiwan, based on Blackwell GB200 NVL72 servers.

A Supercomputer in Kaohsiung: The Taiwanese Benchmark in AI Performance

Foxconn, a Taiwan-based electronics manufacturer, has announced the start of construction of a powerful AI supercomputer in collaboration with NVIDIA. The system will be built on the Blackwell architecture, known for its computing performance.

In a blog post, NVIDIA confirmed the collaboration. The supercomputer, which will be unveiled at Hon Hai Tech Day, will integrate the latest GB200 NVL72 platform, which combines Grace CPUs with Blackwell GPUs.

The supercomputer is expected to deliver more than 90 exaflops of AI performance, which would make it the most powerful ever built in Taiwan. This should help position the country as a global leader in AI-driven sectors, supporting demanding workloads such as cancer research and the development of advanced language models.

James Wu, VP and spokesperson at Foxconn, said:

The AI supercomputer from Foxconn, powered by NVIDIA’s Blackwell platform, represents a significant advancement in computing and efficiency.

Foxconn is pursuing a three-pronged strategy focused on manufacturing, smart cities and electric vehicles. The supercomputer will play a key role in the development of AI-assisted services for urban areas. Each GB200 NVL72 rack can house up to 36 Grace CPUs and 72 Blackwell GPUs, connected within a single 72-GPU NVLink domain.


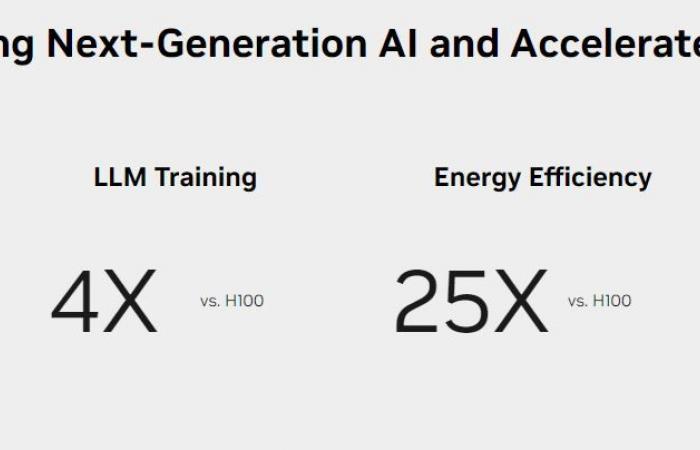

According to NVIDIA, each rack will be able to provide up to 3,240 TFLOPS of FP64 and FP64 Tensor Core performance, as well as up to 13.5 TB of HBM3e memory with a memory bandwidth of 576 TB/s. Compared to the NVIDIA H100 Tensor Core GPU, the GB200 NVL72 can achieve up to 30x better LLM inference performance, 4x better training performance, and 18x better data processing efficiency.

With up to 64 racks, the system will total 4,608 Blackwell GB200 GPUs dedicated to high-performance computing. It is well suited to training large AI models and to running inference on models with several trillion parameters.
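
The GPU count follows directly from the per-rack figures quoted above. As a rough illustration, the short Python sketch below multiplies those per-rack numbers by the rack count. The function name `cluster_totals` and the dictionary keys are made up for this example, and the totals assume simple linear scaling of NVIDIA's "up to" peak figures, so they are upper bounds rather than measured performance.

```python
# Per-rack figures for the GB200 NVL72 as quoted in this article.
GPUS_PER_RACK = 72            # Blackwell GPUs per rack
CPUS_PER_RACK = 36            # Grace CPUs per rack
FP64_TFLOPS_PER_RACK = 3240   # peak FP64 throughput per rack
HBM3E_TB_PER_RACK = 13.5      # peak HBM3e capacity per rack
RACKS = 64                    # rack count planned for the system


def cluster_totals(racks: int = RACKS) -> dict:
    """Scale the per-rack peaks linearly (an assumption, not a benchmark)."""
    return {
        "gpus": racks * GPUS_PER_RACK,
        "cpus": racks * CPUS_PER_RACK,
        "fp64_pflops": racks * FP64_TFLOPS_PER_RACK / 1000,  # TFLOPS -> PFLOPS
        "hbm3e_tb": racks * HBM3E_TB_PER_RACK,
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value:g}")
```

With 64 racks this gives 4,608 GPUs, matching the figure above, along with 2,304 Grace CPUs. The FP64 total is not comparable to the 90-exaflop figure, which presumably refers to lower-precision AI arithmetic.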

The first phase of the supercomputer is scheduled to launch in mid-2025, with full deployment by 2026. It will integrate several NVIDIA technologies, including NVIDIA Omniverse, Isaac for robotics, and digital twins, which offer a range of tools and libraries to optimize manufacturing processes.