The Windows application is now available to everyone

The application evolved during the testing period. It now offers more features, such as the ability to take a photo with the computer's webcam and send it in the conversation. New options have also appeared: choosing the shortcut that opens the application window (Alt + Space by default) and changing the text size with the Ctrl+Plus and Ctrl+Minus shortcuts. A button in the settings also lets you check whether an update is available.

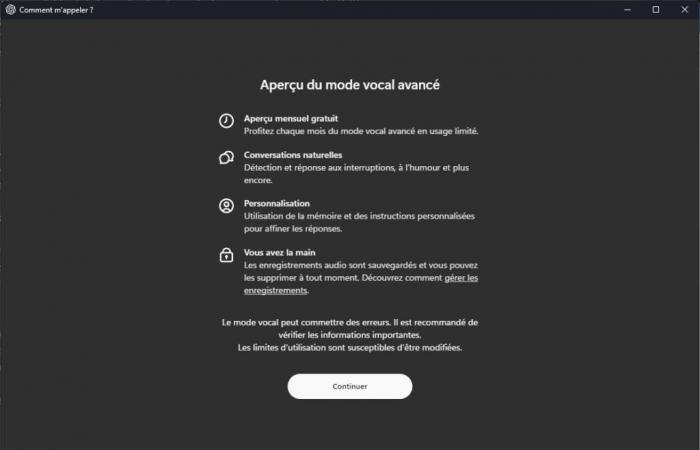

Among other notable additions in recent months, the application gained a sidebar for searching the history of your conversations with ChatGPT. You can of course use the company's latest models (including GPT-4o), but with usage limits. The same goes for voice mode, which appeared during the beta: everyone can use it, but the free version is capped, and the cap disappears with a subscription.

Desktop versions can be obtained from the official OpenAI website.

On Mac, ChatGPT can read IDEs

Meanwhile, the Mac version of ChatGPT is opening up to other applications. Released before the Windows version, it is also the first to explore this new territory. As OpenAI announced in a tweet, this opening starts with several development tools: VS Code, Xcode, Terminal and iTerm2.

The company shows a few examples in a short video published on […]. However, you must click the Xcode tab in the application to switch it to this mode. Clicking the tab lets ChatGPT "read" the development environment and retrieve information from it.

In another demonstration given to TechCrunch, an Xcode window contained incomplete code for a program modeling the solar system. From ChatGPT, the developer asked for the missing code to be added so that the missing planets would be modeled, which the application managed to do. Depending on the case, either all the code in the main window is sent, or only the last 200 lines. You can also highlight the part of the code you consider relevant so that ChatGPT prioritizes it in its context.
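To make this context-selection behavior more concrete, here is a minimal Python sketch. It is purely illustrative and rests on assumptions: that the whole window is sent when it has at most 200 lines and only the last 200 lines otherwise, and that a highlighted selection is simply placed first. The function name `build_context` and the "Selected code:" / "Window contents:" labels are hypothetical; OpenAI has not published how the app actually assembles its context.

```python
from typing import Optional

# Hypothetical threshold based on the 200-line figure reported by TechCrunch;
# the app's real rule for choosing between "all" and "last 200 lines" is unknown.
MAX_LINES = 200


def build_context(window_text: str, selection: Optional[str] = None,
                  max_lines: int = MAX_LINES) -> str:
    """Assemble the text sent as context (illustrative sketch only)."""
    lines = window_text.splitlines()
    # Assumption: the whole window is sent when it is short enough,
    # otherwise only its last `max_lines` lines are kept.
    if len(lines) > max_lines:
        lines = lines[-max_lines:]
    body = "\n".join(lines)
    # Assumption: a highlighted selection is placed first so it gets priority.
    if selection:
        return "Selected code:\n" + selection + "\n\nWindow contents:\n" + body
    return body


if __name__ == "__main__":
    # A 500-line "file": only lines 301 to 500 survive the truncation.
    source = "\n".join(f"line {i}" for i in range(1, 501))
    ctx = build_context(source)
    print(ctx.splitlines()[0])    # line 301
    print(len(ctx.splitlines()))  # 200
```

Putting the selection at the top is just one simple way to express "priority" in a plain-text prompt; the actual app may weight or label it differently.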

Only text for now

There are currently several limitations, however. The most important is that code produced by ChatGPT cannot be pushed back into the environment: you have to copy and paste it to test it. For now, this makes it hard to compare with much more tightly integrated tools such as GitHub Copilot or Cursor. In addition, ChatGPT cannot always read an environment directly: for Visual Studio Code, you must install an extension.

The culprit is the mechanism the ChatGPT application uses to read information on the Mac: macOS's accessibility API, the system feature on which the VoiceOver screen reader is built. As TechCrunch reports, it is generally reliable but sometimes fails to read information. It is also limited to text, so when ChatGPT interacts with other applications, it can only draw context from the written word.

The feature is called "Work with Apps" and should eventually support any type of application. Development environments come first because coding is one of the most common uses of generative AI.

The versatile agent, a new frontier?

Interaction with other applications looks like the next big step, and several approaches are possible. OpenAI seems to be heading toward agents, but for now that means working on compatibility case by case.

On Wednesday, Bloomberg reported that OpenAI was preparing a versatile agent called Operator. Planned for 2025, it would interface with other applications to assist with a wide range of activities. This jack of all trades would then compete with other efforts in the field, notably the latest Claude 3.5 Sonnet, which offers a new beta feature called Computer Use. Computer Use takes screenshots to understand requests by analyzing what is on the screen, then simulates keystrokes and mouse clicks.

Each approach has its advantages and drawbacks. Anthropic's, with its latest Claude, is more general. But according to figures published by the company, even its latest model is still far from reliable at carrying out tasks: only 49% of the on-screen actions requested were successful.

OpenAI does not give a figure, but its success rate is likely much higher. Work with Apps does not use screenshots and therefore does not depend on image analysis to understand the context: the information is supplied by a system API. In return, OpenAI must make do with text, and only from applications whose compatibility has been specifically worked on, whereas Anthropic can "act" on anything visible on the screen. OpenAI also says nothing about Work with Apps coming to Windows.

On Windows, Microsoft has oddly removed capabilities from its Copilot application, as we noted in our article on the major 24H2 update. The publisher likely has plans in store.