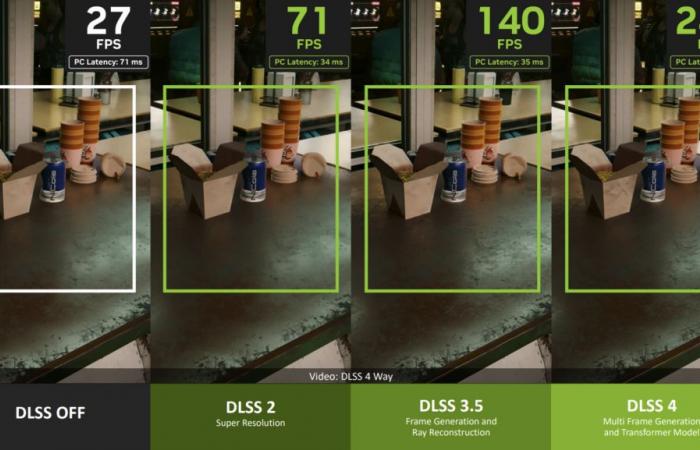

This may be an aberration, but it is an evolution imposed by the physical limits of graphics chips. We've known for quite some time that a paradigm shift was needed to keep gaining performance and visual quality. For many reasons (mostly historical ones and simplicity), we continue with triangulation and texture mapping, and technologies such as FSR and DLSS make it possible to go beyond the limits of rasterization at a reasonable cost.

To illustrate this, launch a very old game on a modern card: you will see that you don't get that many more FPS than at the time (maybe between 10x and 100x) and it's still ugly, even though your graphics card is several thousand times more complex and faster. It's just that the current technologies that boost performance and improve rendering did not exist at the time (large caches, texture compression, T&L, TXAA, etc.).

We can draw a parallel with processors: are 64-bit and SIMD instructions (MMX, SSE, AVX, etc.) also aberrations?

The same goes for video and audio (probably an even closer comparison): is lossy compression (WMA, MP3, AV1, HEVC, …) an aberration given the bitrate savings?