What is left for the big models? On certain mathematical and arithmetic tests, Microsoft’s new small language model (SLM) “Phi-4” outperforms large frontier models such as GPT-4o, Claude 3.5 or Gemini Pro 1.5!

Long dominated by gigantic architectures with hundreds of billions of parameters, the AI ecosystem is now enthusiastic about the strategic advantages of more compact models. Faster to train, more economical in computing resources and easier to deploy, these “small” models had until now established themselves mainly in constrained environments, whether industrial applications, research tools or hybrid services. Today they are becoming essential everywhere: in research laboratories, in companies and even in cloud AI, as evidenced by the enormous potential of “Gemini 2.0 Flash”, now the reference multimodal model behind the Gemini assistant.

In 2024, small models proliferated, approaching and sometimes exceeding the capabilities of large models while requiring far less compute and energy at inference time. Some can even run locally and, as long as they are used wisely, prove as capable as the major cloud models.

Among these small models, Microsoft’s Phi family has been in the news a lot this year. The first versions of Phi were adopted by research teams, independent developers and technology companies keen to find an optimal compromise between performance, speed and cost. Previous iterations, such as Phi-3, showed that response quality can remain satisfactory even when the size of the neural network is limited. Users saw this as an opportunity to integrate AI into their products and services more easily, without the heavy infrastructure and energy costs associated with the giants of the field.

Phi-4, a small model that reasons

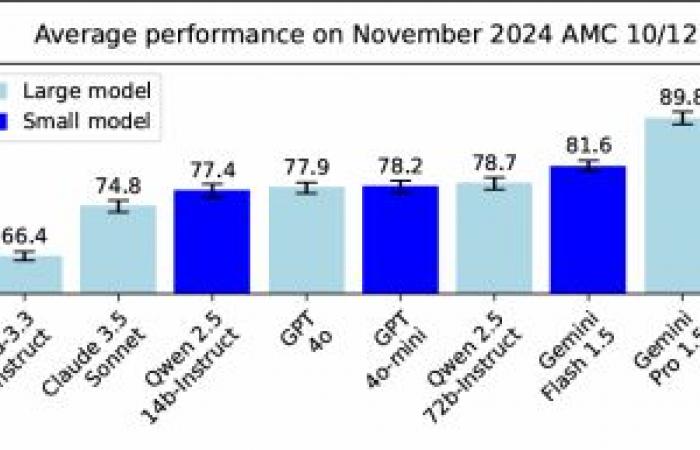

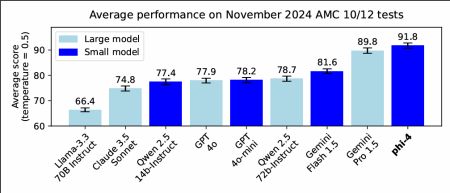

It is in this context that Microsoft has just announced Phi-4, a new generation distinguished by significant advances, particularly in mathematics. Large frontier LLMs such as OpenAI’s “o1” or Anthropic’s “Claude 3.5 Sonnet” have only just introduced reasoning capabilities, and such capabilities are already making their way into the world of small models!

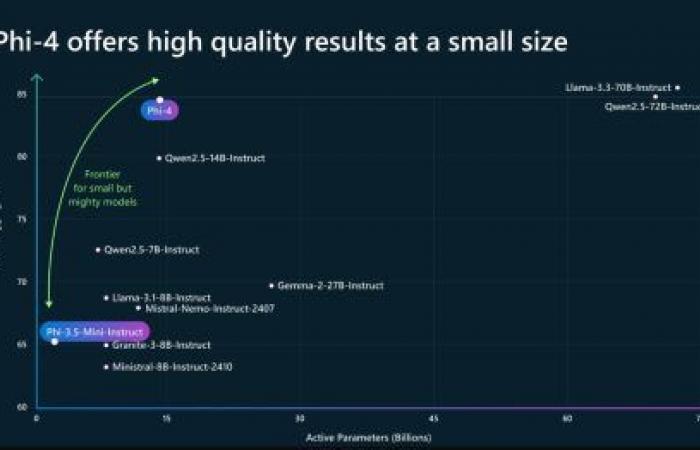

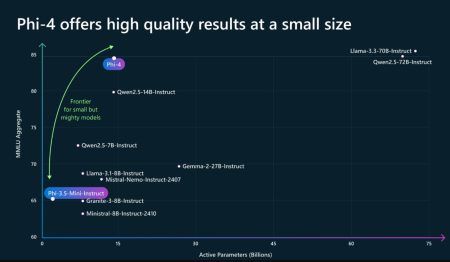

With 14 billion parameters, Phi-4 remains a “modest” size model by market standards, but it reaches a remarkable level of performance on demanding evaluations, even outperforming larger models, including Gemini Pro 1.5 and Claude 3.5 Sonnet, in solving mathematical problems!

This success relies on the quality of a carefully chosen training set, stricter data cleaning and a careful post-training process (for example, rejection sampling, self-revision and instruction reversal), all intended to guarantee the credibility of the evaluations and the relevance of the results. In addition, particular effort was made to avoid contaminating the benchmarks with data already seen during training. This precaution is crucial to verify that the improvement in the model’s capabilities is real, an improvement confirmed on recent, previously unpublished mathematical tests.
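To make the idea of benchmark contamination concrete, here is a minimal sketch of one common way to flag it: measuring word n-gram overlap between a test item and training documents. This is an illustration only, not Microsoft’s actual pipeline; the function names, the n-gram length and the threshold are all assumptions chosen for the example.

```python
def ngrams(text, n):
    """Return the set of word n-grams in a text (lowercased, whitespace-split)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def is_contaminated(benchmark_item, training_docs, n=8, threshold=0.5):
    """Flag a benchmark item if a large share of its n-grams appears in any
    single training document. n and threshold are illustrative values."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        # Item shorter than n words: nothing to compare.
        return False
    for doc in training_docs:
        overlap = len(item_grams & ngrams(doc, n)) / len(item_grams)
        if overlap >= threshold:
            return True
    return False


# Hypothetical usage with a tiny "training corpus".
training_docs = ["the quick brown fox jumps over the lazy dog"]
print(is_contaminated("quick brown fox jumps over", training_docs, n=3))
print(is_contaminated("solve x squared plus two x equals zero", training_docs, n=3))
```

Items flagged this way would be removed from the training data (or the benchmark discarded), so that a good score reflects capability rather than memorization.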

The result is a better-trained, sharper Phi-4 model, able to tackle arithmetic and algebraic problems more confidently and coherently.

Obviously, the modest size of Phi-4 remains an obstacle to certain forms of in-depth reasoning or contextual understanding, and the model does not escape the well-known phenomenon of “hallucinations” when the domain addressed is too specific or insufficiently represented in the training corpus.

For now, Phi-4 is available via the Azure AI Foundry platform, under a research license, and should soon join other distribution channels, including Hugging Face. Its release is part of an effort to democratize models that are more reasonable in size, simpler to customize and better suited to various operational contexts. No doubt we will hear a lot more about small models in 2025…

Source: Introducing Phi-4: Microsoft’s Newest Small Language Model Specializing in Complex Reasoning
