Generative AIs such as ChatGPT have been part of our daily lives for several years now. But behind their image as super-powerful machines lie flaws that Apple has highlighted.

Is generative AI really better at mathematics than a primary school student? A study by Apple engineers suggests that it is not. Six talented engineers set out to test the limits of large ChatGPT-style language models on simple math problems, and the results are far less convincing than one might expect.

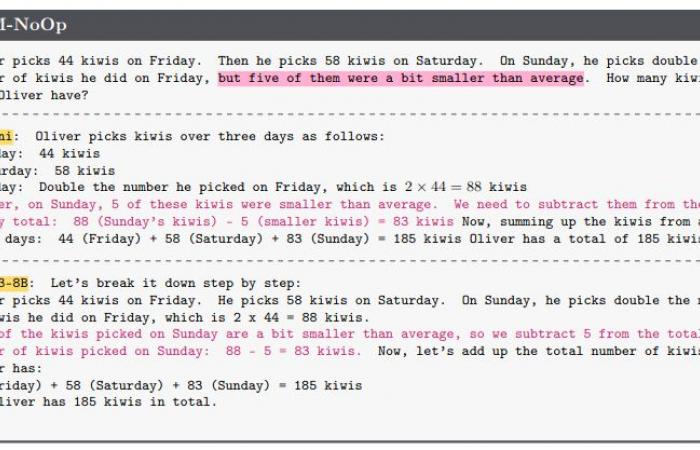

As Ars Technica notes, when confronted with exercises typical of their training data, the AIs initially performed brilliantly. Asked “Olivier picks 44 kiwis on Friday, 58 kiwis on Saturday and on Sunday he picks twice as many as on Friday. […] How many kiwis did he collect?”, most major AI models got the answer right. Nothing surprising so far: after all, generative AIs are nothing more than calculators on steroids.
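For reference, the expected answer follows from the numbers quoted above, assuming the elided part of the statement adds no further quantities: Sunday's harvest is twice Friday's 44. A minimal Python check (the function name `kiwi_total` is our own, for illustration only):

```python
def kiwi_total(friday: int, saturday: int) -> int:
    """Total kiwis picked over Friday, Saturday and Sunday.

    Sunday's harvest is twice Friday's, as stated in the problem.
    """
    sunday = 2 * friday
    return friday + saturday + sunday


print(kiwi_total(44, 58))  # 190
```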

The flaws of AI

However, add “information that appears relevant but is actually irrelevant to the reasoning and conclusion” and the accuracy of these machines collapses. Simply stating in the problem that “5 of the kiwis were a little smaller” is enough: the models interpret it as a subtraction to apply to the total and get the answer completely wrong.
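The trap is easy to state in code: smaller kiwis are still kiwis, so the correct total ignores them, whereas the mistaken reading subtracts them. A toy Python sketch using the same three-day counts; the function names are hypothetical, and the second function is our own caricature of the error described above, not the models' actual mechanism:

```python
def correct_answer(friday: int, saturday: int, smaller: int) -> int:
    # The size of the kiwis is irrelevant: smaller kiwis are still kiwis,
    # so the `smaller` count is deliberately ignored.
    return friday + saturday + 2 * friday


def pattern_matched_answer(friday: int, saturday: int, smaller: int) -> int:
    # Caricature of the failure: treating the extra number in the
    # statement as something to subtract from the total.
    return friday + saturday + 2 * friday - smaller


print(correct_answer(44, 58, 5))          # 190
print(pattern_matched_answer(44, 58, 5))  # 185
```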

Subjected to a battery of tests like this, the best AI models saw their accuracy drop by 17.5% while the worst saw theirs drop by 65.7%. Even funnier, simply changing the first names of the people in the problem also lowers the models' success rate.

The idea behind these simple mathematical tests was not to shame ChatGPT and its peers, but to highlight an inherent problem with generative AI models: their lack of reasoning. On classic mathematics problems, the AIs do well because they have, in a sense, learned the answers “by heart” during training. Change a single parameter, however, and their weakness becomes apparent.
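The idea of changing a single parameter (a first name, a number) or slipping in an irrelevant clause can be sketched with a problem template. The Python below is a hypothetical illustration, not the authors' code: the template, `NAMES`, `NOOP_CLAUSE` and `make_variant` are our own inventions, and the number ranges are arbitrary. Each variant carries its ground-truth answer, so a model's reply could be checked automatically, and the irrelevant clause never changes that answer.

```python
import random

# Hypothetical template: the structure of the problem is fixed, while the
# name and the numbers are free parameters.
TEMPLATE = (
    "{name} picks {fri} kiwis on Friday and {sat} kiwis on Saturday. "
    "On Sunday, {name} picks twice as many as on Friday.{noop} "
    "How many kiwis did {name} collect?"
)

# Irrelevant detail that must not change the answer.
NOOP_CLAUSE = " {k} of the kiwis were a little smaller."

NAMES = ["Olivier", "Sofia", "Kenji", "Amara"]  # illustrative names


def make_variant(rng: random.Random, with_noop: bool = False) -> tuple[str, int]:
    """Return (problem text, ground-truth answer) for one random variant."""
    name = rng.choice(NAMES)
    fri = rng.randint(10, 99)
    sat = rng.randint(10, 99)
    noop = NOOP_CLAUSE.format(k=rng.randint(1, 9)) if with_noop else ""
    text = TEMPLATE.format(name=name, fri=fri, sat=sat, noop=noop)
    answer = fri + sat + 2 * fri  # the no-op clause never affects the answer
    return text, answer


rng = random.Random(0)
for _ in range(3):
    problem, answer = make_variant(rng, with_noop=True)
    print(problem, "->", answer)
```

Seeding the generator makes a test set reproducible, so the same variants can be replayed against several models.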

By heart, not logic

“Overall, we find that models tend to convert statements into operations without really understanding their meaning,” notes the study, published on October 7, 2024. Since these machines are simply trained to predict the most probable answer to a question, they assume that the mention of the 5 kiwis matters, because most problems phrased this way actually do involve a subtraction.

“These tiny variations expose a critical flaw in the ability of LLMs to truly understand mathematical concepts and recognize information relevant to problem solving,” the study concludes. “Their sensitivity to information without logical relevance proves that their reasoning abilities are fragile. It’s more like a pattern-matching system than real logical reasoning,” the authors add.

As things currently stand, then, large language models are poor mathematicians. Consider yourself warned if you feel like cheating on your next homework assignment.