Key information

- Large Language Models (LLMs) can be used to generate many variations of malicious JavaScript code.

- Attackers can leverage LLMs to modify or obfuscate existing malware, evading traditional detection methods.

- Over time, this constant rewriting of malware variants could degrade the performance of malware classification systems.

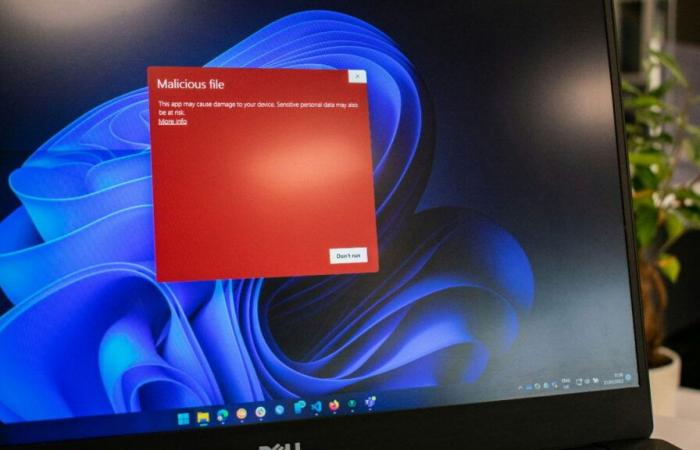

Researchers have discovered that large language models (LLMs) can be used to generate many variations of malicious JavaScript code, making that code harder for detection systems to identify. While LLMs can't easily create malware from scratch, cybercriminals can use them to modify or obfuscate existing malware so that it evades traditional detection methods.

By requesting specific transformations from LLMs, attackers can produce new malware variants that look more natural and less suspicious to security software. Over time, this constant rewriting could degrade the performance of malware classification systems, causing them to misclassify malicious code as benign.

WormGPT and malware generation

Despite LLM vendors' efforts to implement security measures and prevent abuse, bad actors have developed tools such as WormGPT to automate the creation of convincing phishing emails tailored to specific targets, and even to generate new malware. The rewriting technique the researchers describe works differently: it repeatedly rewrites existing malware samples using transformations such as variable renaming, string splitting, and code reimplementation.

The output of each iteration is fed back in as the input to the next, producing a new variant that retains the original functionality while often receiving a significantly lower maliciousness score from the classifier. In some cases, these rewritten variants also escape detection by other malware scanners when uploaded to platforms such as VirusTotal.

Advantages of LLM-based obfuscation

LLM-based obfuscation has several advantages over traditional methods. It produces more natural-looking code, which is harder to detect than the output of tools like obfuscator.io. Additionally, the sheer volume of new malware variants this process can generate poses a significant challenge for security researchers and developers.

Despite these challenges, researchers are also exploring ways to use LLMs to improve the robustness of machine learning (ML) models. By using LLM-generated adversarial examples as training data, they aim to create more resilient systems capable of identifying and mitigating sophisticated threats.
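
The retraining idea can be illustrated with a minimal data-augmentation sketch. This is not the researchers' pipeline: the names `augment` and `rename_identifier` are hypothetical, the snippets are harmless toy strings, and a simple regex rename stands in for the LLM rewriting step. The only assumption carried over from the article is that a rewritten variant keeps the behavior, and therefore the label, of the sample it came from.

```python
import re
from typing import Callable, List, Tuple

# (JavaScript source text, label), where 1 = malicious and 0 = benign.
Sample = Tuple[str, int]
Rewriter = Callable[[str], str]


def rename_identifier(old: str, new: str) -> Rewriter:
    """Build a rewriter that renames one identifier (whole-word matches only).

    Hypothetical stand-in for an LLM-driven transformation.
    """
    pattern = re.compile(r"\b" + re.escape(old) + r"\b")
    return lambda code: pattern.sub(new, code)


def augment(samples: List[Sample], rewriters: List[Rewriter]) -> List[Sample]:
    """Return the original samples plus each distinct rewritten variant.

    Every variant inherits the label of its source sample, on the assumption
    that the rewrite preserves behavior.
    """
    augmented = list(samples)
    seen = {code for code, _ in samples}
    for code, label in samples:
        for rewrite in rewriters:
            variant = rewrite(code)
            if variant not in seen:  # skip no-op rewrites and duplicates
                seen.add(variant)
                augmented.append((variant, label))
    return augmented


# Harmless toy snippets standing in for a labeled corpus.
corpus = [
    ("var payload = fetchData(); run(payload);", 1),
    ("var total = price * quantity;", 0),
]
rewriters = [
    rename_identifier("payload", "result"),
    rename_identifier("total", "sum"),
]
training_set = augment(corpus, rewriters)
```

The augmented `training_set` would then be passed to whatever classifier is being retrained, so that it sees rewritten variants alongside the originals rather than only the original forms.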
