When 14 billion parameters outperform models five times larger… The evolution of SLMs reaches a strategic milestone with the introduction of compact “Phi-4” models capable of advanced reasoning, low latency, reflection and performance comparable to massive LLMs.

Microsoft may be a highly privileged partner of OpenAI and work with most players to integrate their AI models into Azure AI Foundry, but the vendor does not refrain from pursuing its own technological avenues: innovations at the heart of neural networks (such as its surprising BitNet b1.58 model, based on trits), its own open-source SLMs, and models kept secret (the MAI-1 project).

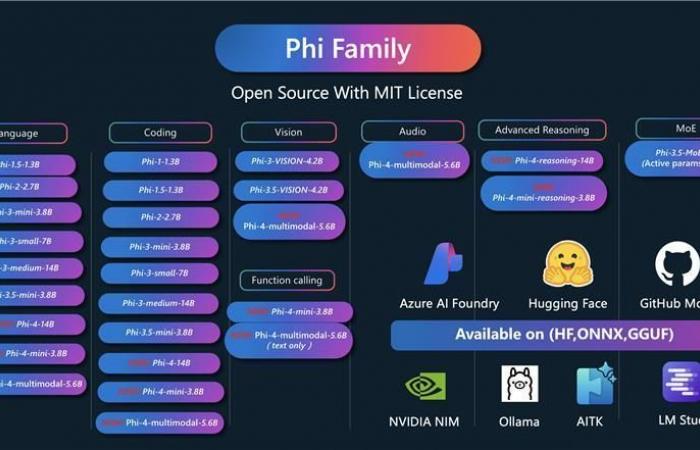

A year after launching its Phi-3 range of small AI models (SLMs), and two months after opening generation 4 with a multimodal SLM (Phi-4-multimodal) and a tiny model (Phi-4-mini), Microsoft is announcing three new variants of its latest-generation SLM: Phi-4-reasoning, Phi-4-reasoning-plus and Phi-4-mini-reasoning.

Published on April 30, 2025, these “built-in reasoning” versions broaden the open-weight range of compact models aimed at developers who must keep latency low while still requiring complex reasoning.

At the heart of the Microsoft engineers’ approach to making their SLMs “reason”: supervised fine-tuning (SFT) on reasoning chains produced by OpenAI o3-mini, plus reinforcement learning (RL) for the “plus” variant. “Thanks to distillation, reinforcement learning and high-quality data, these models reconcile size and performance,” Microsoft explains.

Small but gifted

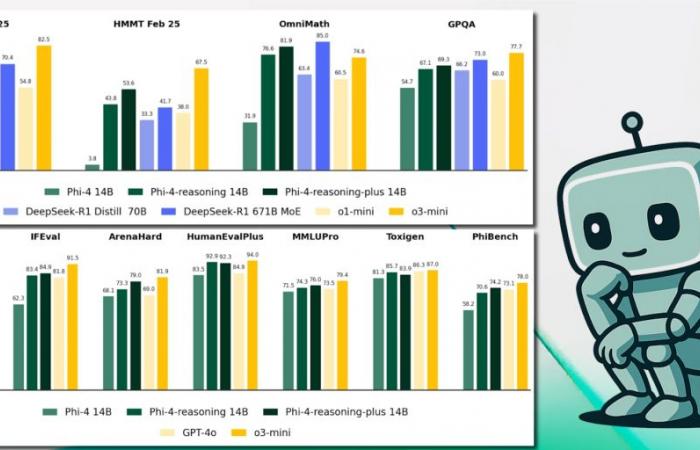

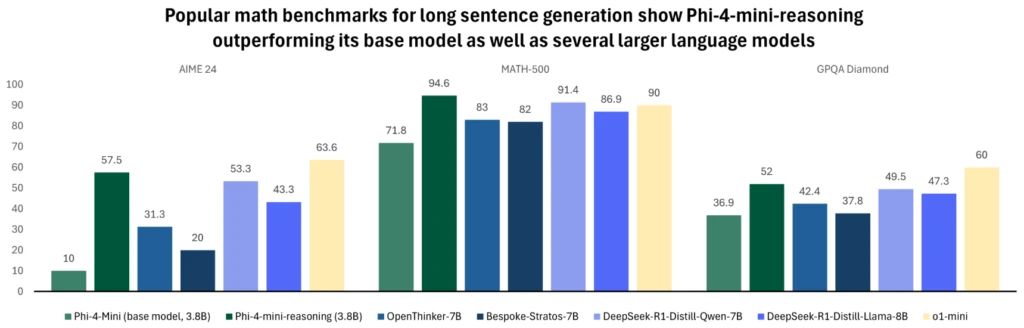

The results on the main benchmarks are enough to make the competition turn pale: with only 14 billion parameters, Phi-4-reasoning outperforms DeepSeek-R1-Distill-Llama-70B (70 billion parameters) on AIME 2025, MMLU-Pro and HumanEval-Plus, and comes close to the full DeepSeek-R1 model (671 billion parameters)! The Phi-4-reasoning-plus variant, which keeps the same 14 billion parameters but was trained with 1.5 times more tokens, approaches the scores of OpenAI o3-mini on OmniMath! For reference, Phi-4-reasoning has a standard context window of 128,000 tokens, which was extended to 256,000 tokens for Phi-4-reasoning-plus.

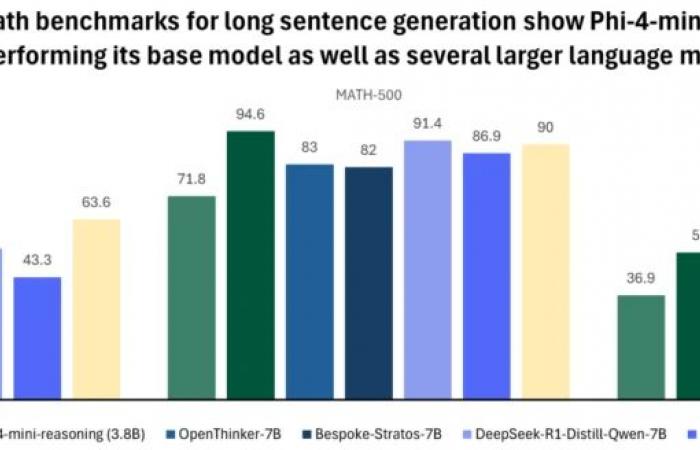

Designed for embedded use, Phi-4-mini-reasoning has 3.8 billion parameters, was trained on a synthetic dataset of one million math problems generated by DeepSeek-R1, and matches the performance of o1-mini on MATH-500 while outperforming several models with 7 to 8 billion parameters.

With its very small size, this model is ideal for local execution, including on mobile devices, and for use cases that need near-immediate responses. It is particularly well suited to educational uses and local chatbots.
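To put the sizes quoted above in perspective, here is a minimal back-of-the-envelope sketch. It only uses the parameter counts given in the article; the 16-bit and 4-bit weight formats are assumptions chosen for illustration, not figures published by Microsoft, and the estimate covers the weights alone (no activations or KV cache).

```python
# Parameter counts quoted in the article, in billions.
PARAMS_BILLIONS = {
    "Phi-4-mini-reasoning": 3.8,
    "Phi-4-reasoning": 14,
    "DeepSeek-R1-Distill-Llama-70B": 70,
    "DeepSeek-R1": 671,
}


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    # billions of parameters * bytes per parameter = gigabytes
    return params_billions * bits_per_weight / 8


def size_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters `larger` has than `smaller`."""
    return PARAMS_BILLIONS[larger] / PARAMS_BILLIONS[smaller]


if __name__ == "__main__":
    for name, billions in PARAMS_BILLIONS.items():
        fp16 = weight_memory_gb(billions, 16)  # assumed 16-bit weights
        int4 = weight_memory_gb(billions, 4)   # assumed 4-bit quantization
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Under these assumptions, the 70-billion-parameter distilled model is exactly five times the size of Phi-4-reasoning, and the weights of the 3.8-billion-parameter mini model would take roughly 2 GB at 4 bits per weight, which is the order of magnitude that makes on-device execution plausible.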

Models open to various uses

On the deployment side, CIOs will find these models already optimized for Copilot+ PCs: the NPU-optimized “Phi Silica” variant is preloaded in memory and delivers near-instant response times, ensuring energy-efficient coexistence with business applications. Windows APIs make it possible to integrate offline generation into Outlook or internal tools.

On the safety front, Microsoft claims a pipeline aligned with its responsible AI principles: accountability, fairness, reliability, safety and inclusiveness. The models undergo post-training that combines SFT, Direct Preference Optimization and RLHF, using public and internal datasets oriented toward “Helpfulness/Harmlessness”. Microsoft also publishes model cards that detail the remaining limitations and mitigation measures.

Available now on Azure AI Foundry, Hugging Face and GitHub Models, the three models are published under the very permissive MIT license, opening the way to local inference as well as hybrid cloud deployments. For security and architecture teams, this new generation of SLMs offers a credible alternative to massive LLMs, with a reduced TCO, local or edge execution, and greater control over data. These models are proof of the remarkable progress SLMs have made in a year, and of their striking potential in a world looking for AI that is cheaper and more frugal in energy and resources.
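As an illustration of what local inference could look like, here is a minimal sketch using the Hugging Face `transformers` text-generation pipeline. The repository id `microsoft/Phi-4-mini-reasoning` and the helper names `build_messages` and `ask_locally` are assumptions made for this example; check the model card for the exact id, recommended prompt format and hardware requirements before relying on it.

```python
from typing import Dict, List

# Assumed Hugging Face repository id; verify it on the model card.
MODEL_ID = "microsoft/Phi-4-mini-reasoning"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a question in the role/content chat format."""
    return [{"role": "user", "content": question}]


def ask_locally(question: str, max_new_tokens: int = 512) -> str:
    """Run the model on the local machine and return its reply.

    The first call downloads the weights, so it needs network access,
    disk space and enough memory for the chosen model.
    """
    # Imported lazily because transformers is a heavy optional dependency.
    from transformers import pipeline

    generator = pipeline("text-generation", model=MODEL_ID)
    result = generator(build_messages(question), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the conversation
    # with the assistant's reply appended as the last message.
    return result[0]["generated_text"][-1]["content"]
```

A call such as `ask_locally("What is the sum of the first 10 odd numbers?")` would then run entirely on the local machine once the weights are cached.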

Microsoft’s full family of “Phi” models…
