[Opinion] Character.AI lets Internet users create their own chatbots. In practice, the service is mainly used to create bots imitating real people, and these are increasingly used for harassment. Is it any less serious because it’s a form of fiction? This is the theme of this week's Rule 30 newsletter.

In the world of fanfiction, there is a fairly controversial practice: RPF. Real person fiction is, as its name suggests, a genre that involves writing stories about real people. It can be parody (as is the case for most RPF involving politicians). Most often, it features celebrities in romantic or even erotic situations. Some authors imagine themselves in a relationship with a star. Others play Cupid with the members of a boy band, an F1 team, etc. The unspoken rule of these stories is not to circulate them widely, and to keep them far from the eyes of the people who inspired them.

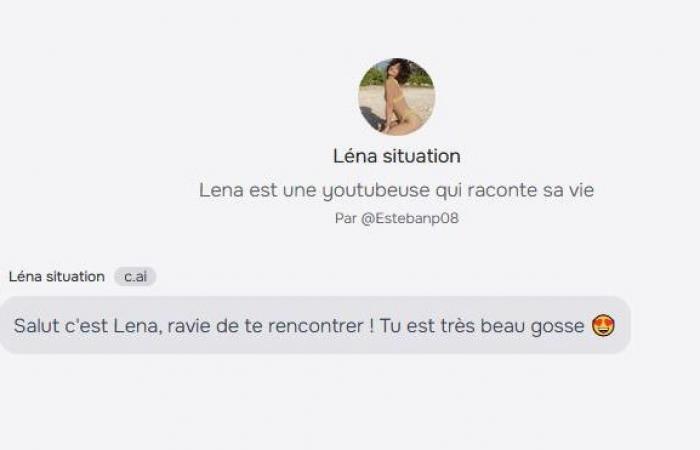

When I first heard about Character.AI, I immediately thought of fanfiction. Designed by two former Alphabet/Google engineers, and valued at more than a billion dollars, this platform lets Internet users create their own chatbot powered by text-generating AI. The home page showcases bots for practicing a job interview or getting reading advice. In practice, the service is mainly used to create programs featuring fictional characters, or even real people. An investigation by Wired magazine reports that these bots are increasingly used for spam, disinformation and harassment (several of the victims cited work in the video game industry, which is always one step ahead when it comes to new forms of online threats).

Character.AI theoretically prohibits bots “posing a risk to privacy” of a person, or created for the purposes of “defamation”, “pornography” or “extreme violence”. Yet it also admits that a program that breaks its rules will be deleted within a week on average. And it does not forget to protect itself (legally) by adding a simple sentence at the bottom of the chat window: “everything the characters say is made up!”

This editorial is an excerpt from Lucie Ronfaut's Rule 30 newsletter, sent on Wednesday, October 23, 2024.

Character.AI is just the tip of the iceberg. Custom bots are very popular online, and difficult to control. At the beginning of October, the Muah.ai platform, which specializes in creating erotic chatbots “without censorship”, suffered a cyberattack that revealed numerous disturbing (and illegal) requests for bots embodying children. These types of programs can also be used to dox real victims (reveal their private information), who are often unaware that someone has created a program in their image.

Is it any less serious because it's fiction?

In a way, these cases are not new. This is yet another story of a platform that refuses to actively moderate the negative effects it could have on its users, and beyond. It also echoes practices that existed before the explosion of generative AI. In 2022, when French streamers denounced the large-scale harassment they were subjected to, some specifically highlighted sexual role-playing games organized on Reddit and Discord using their name and image, obviously without their consent.

We are ultimately very far from RPF, which I would compare to the fantasies of a teenager who imagines a romance with her favorite pop star, in a private or restricted setting. Here, real people, most of the time women, sometimes with no particular fame, are turned into public objects to be owned, controlled and shared. What is new is that the phenomenon now sits at the heart of a company's business model. Is it any less serious because it's fiction?

This editorial was written before we learned of the death of a young teenager, Sewell, who took his own life last February and who had used a Character.AI chatbot extensively. His mother filed a complaint, accusing the chatbot of inciting her son to commit suicide.