As criticized as it is praised, the generative artificial intelligence tool ChatGPT has continued to fascinate since its public launch two years ago. Despite its errors and limitations, many Quebecers now use it as a “personal assistant” for their daily tasks. Le Devoir set out to see how this new kind of collaboration is going.

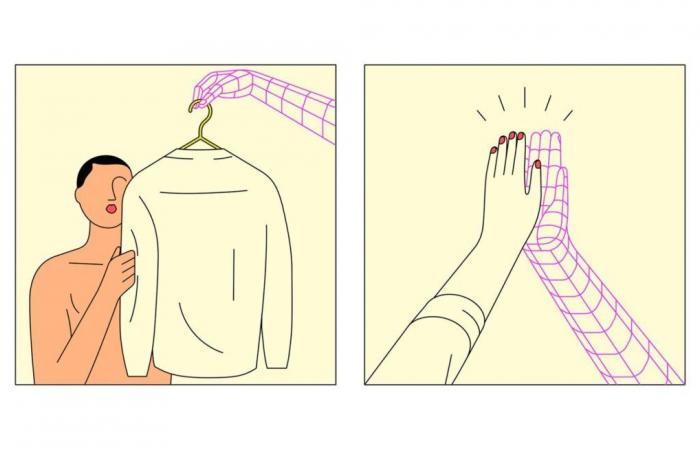

Imagine having a personal assistant to answer your questions, make your grocery list, plan your outings and make decisions for you. Could this dream come true now that ChatGPT, launched two years ago, keeps improving? To find out, I handed over the organization of my daily life to OpenAI’s conversational tool for three days, without questioning its choices (too much).

“It’s a great idea and a fun experiment!” ChatGPT tells me right away, delighted by my proposal.

The experiment begins on a Sunday morning. I ask ChatGPT to help me plan my tasks for the day: grocery shopping, cleaning, cooking, laundry, a yoga class and getting further in my book for my book club. Just looking at the list makes my head spin, but in a matter of seconds, ChatGPT neatly arranges it all hour by hour.

It alternates household chores with leisure activities and allows enough time for everything, except lunch, which I am supposed to prepare and eat in… 15 minutes. Dinner has vanished from the schedule, and there is apparently no time for a shower. On the other hand, it includes “a short walk” of 30 minutes and suggests that I relax in front of a film before bed. Screen time to help me sleep, really?

“You have to be precise and give it as many details and criteria as possible so that it understands the request,” explains Gauthier Gidel, who works at Mila, the Quebec artificial intelligence institute. “It takes time and practice to use ChatGPT well.”

Lesson learned. “Can you make a grocery list for six portions of leek soup for my lunches?” It couldn’t be clearer. In no time, a complete list scrolls across my screen without a single item missing. I follow up: “Which grocery store in the neighborhood has the lowest prices?” ChatGPT recommends IGA. Yet the local fruit store has unbeatable prices.

“There is a notion of consensus in the techniques used to train ChatGPT. Its recommendations are mainstream and consensual; it will give the easiest and most obvious answer,” says Gauthier Gidel, who also teaches at the Université de Montréal. The tool thus favors large chain stores over small local businesses, which it probably knows little about.

The same thing happens when I ask it where to buy a book. Its three suggestions: Archambault, Renaud-Bray and Amazon. No mention of independent local bookstores.

Effective, but not infallible

On Monday morning, I turn to my personal assistant to plan my commute to the office. “Walk, Bixi or metro?” It details the time and cost of each option. I press it to decide. “Given the weather forecast, it would be wise to take the metro,” it says. Outside, the wind takes me by surprise; I’m glad ChatGPT made that call.

I then spend my workday putting it to the test. Within seconds, it finds the contact details of the people I need to interview, as well as the title of a documentary whose subject was all I knew. It passes the test on the differences between the TFSA and the RRSP, then summarizes the day’s headlines without too many errors.

Things go downhill when it has to organize my outings for the week. Despite clear instructions about my yoga studio, ChatGPT offers me a class at the wrong time. It then recommends that I see the play Yahndawa’: What We Are at Théâtre Jean-Duceppe on Thursday evening, when the play is actually running at the Trident in Quebec City. The blunders continue when it encourages me to have dinner at a restaurant that has closed for good.

Professor Gidel does not seem surprised by these errors. “ChatGPT was trained on billions of pieces of data available on the web,” he explains. “When you ask it a question, it searches this memory, compresses the information and brings it out in very coherent language. But it was trained on the Internet of 2023. That works for the TFSA, less so for schedules or the day’s temperature, where it has to search the Internet live. It often finds the wrong answer and bullshits with confidence.”

Avoiding choices

Before leaving work on Monday, I ask ChatGPT whether I should go out with friends or go home. It asks me a series of questions, about my energy level and my mood, to “help me make a decision.” I insist: “Choose for me.” “I advise you to stay home this evening and rest. You could take the opportunity to get further in your book for your book club.” Ouch, I’ve been put in my place. By default, ChatGPT remembers my previous questions, and it clearly uses them to personalize its responses more and more.

What bothers me most is the way it systematically avoids giving me a clear, precise answer. It prefers to give me a list of criteria to weigh so that I can make the decision myself.

“ChatGPT has an aversion to making choices,” confirms Gauthier Gidel. “It has been trained not to take sides unless explicitly asked. And even then, if you ask it whether it is a Democrat or a Republican, it will not decide.”

In the professor’s view, it would even be dangerous to let ChatGPT truly take the reins of our lives. “It has no awareness of the consequences of its decisions,” he says. “ChatGPT is an assistant with a hyper-fast Internet connection that provides information to help users think through the best choice available to them.” The professor also reminds us that the tool has no notion of true and false, and he recommends verifying the information it gives us.

Far from replacing humans

Tuesday, the last day of the experiment. “Given the weather, should I go to the office or work from home?” Temperature, wind strength, precipitation: ChatGPT meticulously lists the day’s conditions without making a decision. I insist that it decide. “Going to the office may be less convenient because of the freezing rain. Working from home seems like the more comfortable option.”

Through sheer persistence, I manage to extract a few other decisions, often ordinary ones, from ChatGPT: giving my boyfriend a cookbook for Christmas, treating myself to “caramel or honey” highlights and taking up climbing. Still, it is more useful as a search engine.

I also test it in the kitchen by asking for a cake recipe with coconut milk and cranberries. “Here is a recipe for a soft, fruity and slightly tangy cake. Enjoy!” My colleagues, who taste the dessert the next day, give it a score of 6.5 out of 10. “It’s bland, it lacks character, just like ChatGPT,” says the toughest critic among them.

Before the experiment ends, why not test ChatGPT’s ability to suggest story ideas? “What original consumer-related topic in Quebec should I write about tomorrow?” ChatGPT suggests writing about the impact of inflation on consumer habits or about post-pandemic consumption trends. The good news: with ideas this generic, ChatGPT is not about to replace journalists.

As for me, I’m happy to take back control of my decisions, which ChatGPT often seemed to make on the toss of a coin. The tool won me over with how quickly it answered my questions, but the time lost cross-checking its information discourages me from repeating the experiment.